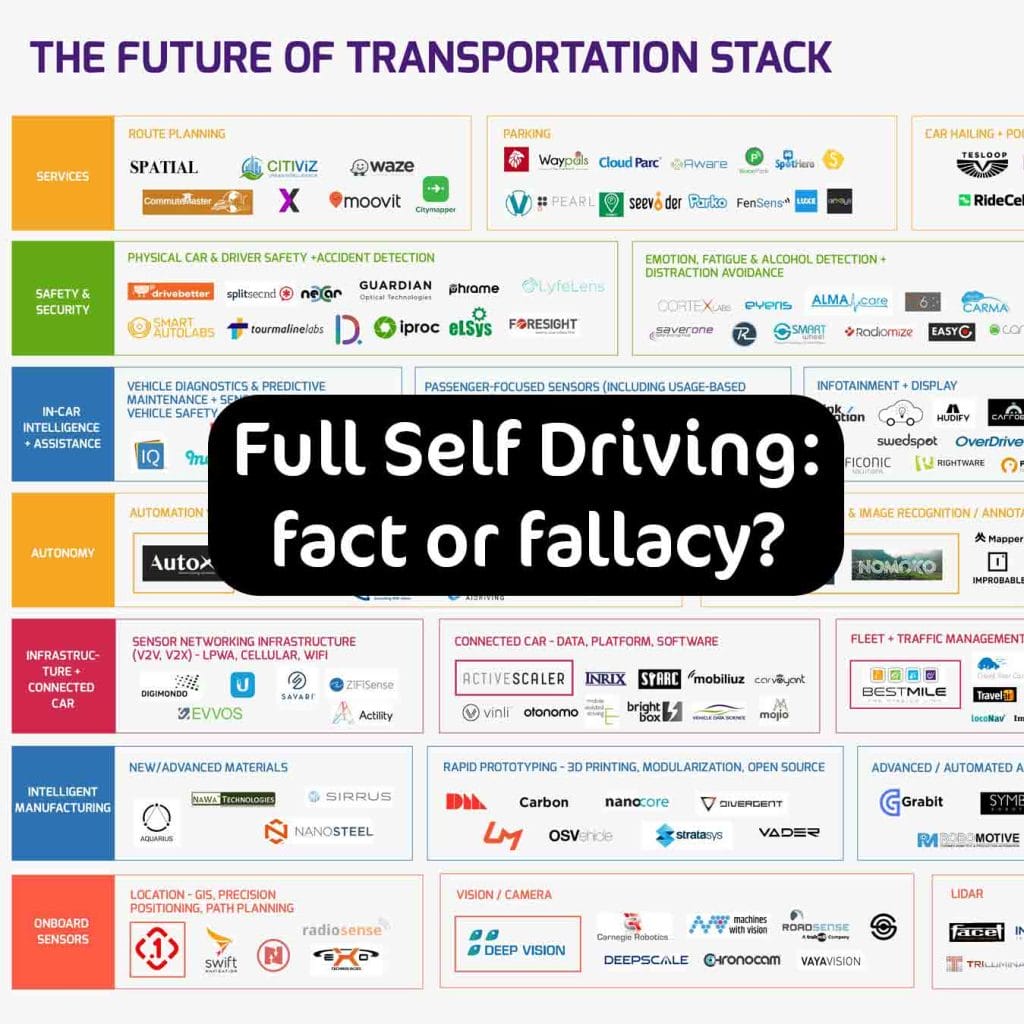

The term Full Self-Driving, or FSD, is thrown around by Tesla as if it were an easily implemented add-on to a kitchen appliance. With all the bravado of a carnival barker, the EV manufacturer touts its beta driving software as a nearly fully functioning answer to all our transportation prayers.

However, as more videos are released of FSD-engaged EVs swerving toward cement pillars and oncoming traffic, running red lights, and braking for phantom obstacles, questions are being raised about the validity of FSD, the safety of its deployment and the ethics of using the public as guinea pigs. Is FSD real? If not, will it ever be? And what are the real-world implications of letting your car drive itself?

To answer these pointed questions, we must widen our view to take in the whole world of Autonomous Vehicles (AVs). Proponents of AV technologies claim that AVs will require less fuel and less land for parking, optimize roadway use, make goods deliveries more efficient, transform the way we use our vehicle space, and eliminate the need for the traditional driver’s license.

What is autonomous driving?

According to the Society of Automotive Engineers (SAE), whose J3016 standard defines them, there are six levels of autonomy for vehicles.

- Level 0: No automation, the driver is entirely in control of the vehicle’s movement, including steering, braking, acceleration, parking, and whatever other maneuvering the vehicle is capable of.

- Level 1: The vehicle aids with either steering or braking and acceleration, but not both at once, and the driver must be prepared to take control at any time for any reason. For instance, adaptive cruise control would be considered Level 1.

- Level 2: Partial automation, the category that highway driving assist systems fall into. The vehicle can take over steering, acceleration and braking together in certain very specific scenarios. The driver must still remain alert and ready to take control at any time. This is the level Tesla told the State of California its FSD falls under.

- Level 3: This stage of automation allows the vehicle to take complete control under limited conditions, and the system will not engage unless all of its requirements are met. The driver must remain awake, however, and be ready to take over when the system asks. An example would be a traffic jam chauffeur. Currently there are no Level 3 systems that are legal to operate on North American roads. Honda, Audi, and Mercedes-Benz all have Level 3 vehicles waiting for regulatory approval.

- Level 4: Considered high driving automation. The vehicle does not need any human interaction while engaged and is programmed to stop itself in the event of a system failure. Since there may be no steering wheel or pedals, the occupant can sleep in this one; a local driverless taxi is the usual example. However, severe environmental conditions, like storms, may limit or cancel a Level 4 vehicle’s operations.

- Level 5: Known as full driving automation, a level 5 vehicle isn’t limited by geofencing or weather and the only human interaction that is required is setting a destination.
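The practical difference between the levels comes down to what is still asked of the human. As a rough sketch of the list above, the snippet below encodes the six levels and two questions about the driver's role. The helper names `human_must_supervise` and `human_is_fallback` are made up for illustration and are not part of any SAE document or vendor API.

```python
from enum import IntEnum


class SAELevel(IntEnum):
    """The six levels of driving automation described in the list above."""
    NO_AUTOMATION = 0
    DRIVER_ASSISTANCE = 1
    PARTIAL_AUTOMATION = 2
    CONDITIONAL_AUTOMATION = 3
    HIGH_AUTOMATION = 4
    FULL_AUTOMATION = 5


def human_must_supervise(level: SAELevel) -> bool:
    """Hypothetical helper: at Levels 0-2 the human must stay alert at all times."""
    return level <= SAELevel.PARTIAL_AUTOMATION


def human_is_fallback(level: SAELevel) -> bool:
    """Hypothetical helper: at Level 3 the human must be available to take over."""
    return level == SAELevel.CONDITIONAL_AUTOMATION


if __name__ == "__main__":
    for level in SAELevel:
        print(level.value, level.name,
              "supervise" if human_must_supervise(level) else
              "fallback" if human_is_fallback(level) else "no human needed while engaged")
```

Seen this way, a system classified as Level 2 sits on the "supervise" side of the line, whatever its marketing name suggests.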

So, where are we?

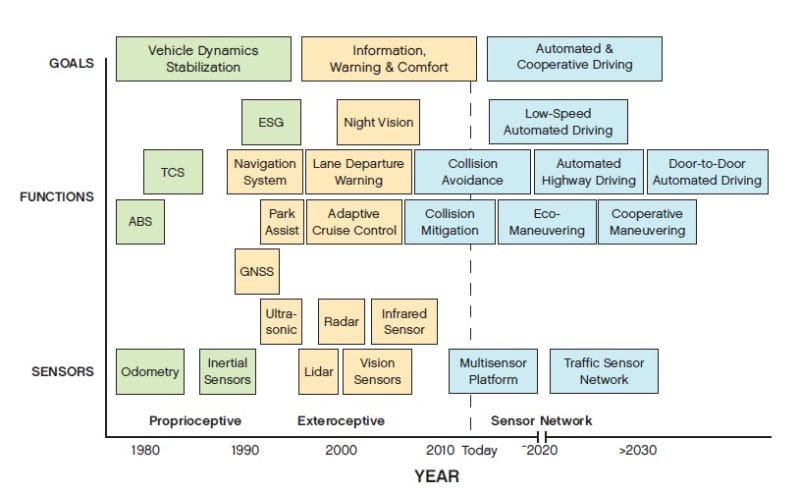

Another white paper put out by Jones Day, a major American law firm, graphs out the history and future of AV technologies:

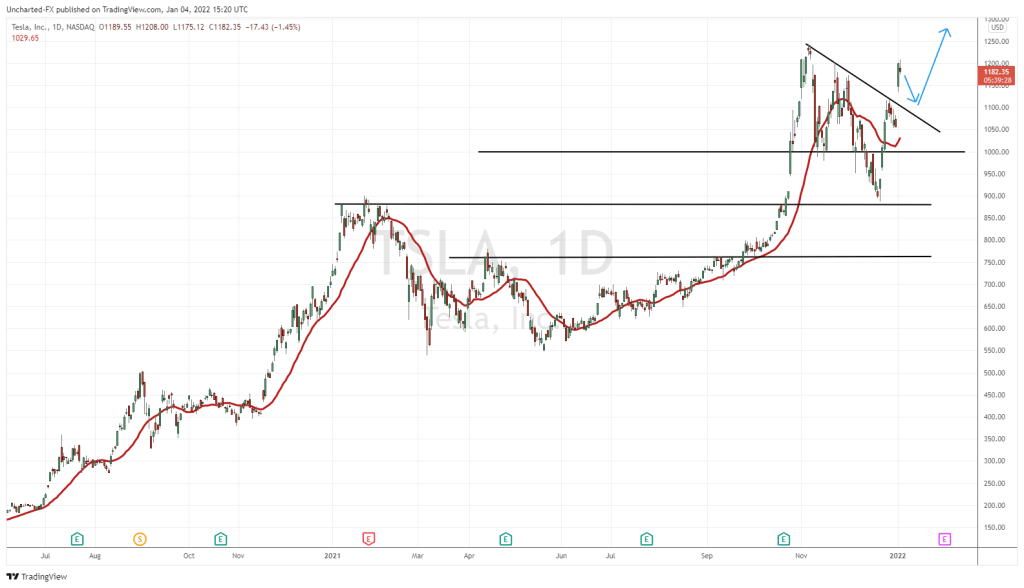

Despite Musk’s optimism, FSD isn’t around the corner after the next software update. In fact, as Steve Wozniak, the co-founder of Apple, puts it, fully autonomous driving is more likely decades down the road, if ever. Why?

Challenges

The hardware may already exist, but the biggest challenge is the software. We have the eyes and the storage capacity, but parsing that mountain of data in real time and then making an informed decision is a mind-boggling task. Machine learning is considered the best approach because of its iterative processing, or ‘thinking outside of the box’, on available data. Its ability to do that, however, is rudimentary.

Speed and Specificity

According to Thomas Dietterich, professor emeritus of computer science at Oregon State University, in a recent NPR interview, reinforcement learning, where a computer is taught to perform a complex task by giving it rewards and punishments, is particularly valuable to control systems like automated driving. He describes the challenge thus:

“Methods for reinforcement learning are still very slow and difficult to apply, so researchers are attempting to find ways of speeding them up. Existing reinforcement learning algorithms also operate at a single time scale, and this makes it difficult for these methods to learn in problems that involve very different time scales. For example, the reinforcement learning algorithm that learns to drive a car by keeping it within the traffic lane cannot also learn to plan routes from one location to another, because these decisions occur at very different time scales. Research in hierarchical reinforcement learning is attempting to address this problem.”
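To make "rewards and punishments" concrete, here is a minimal sketch of tabular Q-learning on a toy lane-keeping task. Everything in it is an assumption made for illustration: the five-position road, the reward values, and the function names `step`, `train` and `greedy_policy`. It bears no relation to how Tesla or any other manufacturer trains its software.

```python
import random

POSITIONS = range(5)   # toy road: 0 and 4 are off the road, 2 is the lane centre
ACTIONS = (-1, 0, 1)   # steer left, hold, steer right


def step(pos, action):
    """Move the car and return (new position, reward, episode finished)."""
    new_pos = pos + action
    if new_pos in (0, 4):
        return new_pos, -10.0, True          # punishment: the car left the lane
    reward = 1.0 if new_pos == 2 else 0.0    # reward: the car is centred
    return new_pos, reward, False


def train(episodes=2000, alpha=0.1, gamma=0.9, epsilon=0.2, seed=0):
    """Learn action values by trial and error (assumed hyperparameters)."""
    rng = random.Random(seed)
    q = {(p, a): 0.0 for p in POSITIONS for a in ACTIONS}
    for _ in range(episodes):
        pos = rng.choice((1, 2, 3))
        for _ in range(20):
            if rng.random() < epsilon:       # occasionally try something random
                action = rng.choice(ACTIONS)
            else:                            # otherwise take the best-known action
                action = max(ACTIONS, key=lambda a: q[(pos, a)])
            new_pos, reward, done = step(pos, action)
            best_next = 0.0 if done else max(q[(new_pos, a)] for a in ACTIONS)
            q[(pos, action)] += alpha * (reward + gamma * best_next - q[(pos, action)])
            pos = new_pos
            if done:
                break
    return q


def greedy_policy(q):
    """Best-known steering action for each on-road position."""
    return {p: max(ACTIONS, key=lambda a: q[(p, a)]) for p in (1, 2, 3)}


if __name__ == "__main__":
    print(greedy_policy(train()))
```

With these settings the learned policy should steer right from position 1, hold at position 2 and steer left from position 3. Even this three-state toy needs thousands of trial episodes, and nothing it learns says anything about planning a route across town, which is the time-scale problem Dietterich describes.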

Maintenance

Algorithms evolve and need to be maintained constantly to ensure that they don’t produce bad results due to erroneous correlations from changing data. This is most visibly illustrated by the recent YouTube video showing a Tesla veering into a cyclist.

This means there will be no FINAL algorithm to drive them all. Coders will be constantly updating to account for changing road conditions, driving habits and environments.

Bad data

You may remember the phrase “garbage in, garbage out.” If a machine learning algorithm pulls in incorrect data points, the result will also be incorrect. That is dangerous enough as a single incident, but a machine learning model also has the potential for a feedback loop: once it develops an incorrect assumption, that assumption can feed into another decision, which will also be wrong, and the error cascades through the system with increasingly harmful effects, like ramming an emergency vehicle from behind.

Diagnosis

ML algorithms are notoriously difficult to diagnose once an issue has been identified, and the more complex they are, the more difficult it is to find out what went wrong. For instance, when Google released its Photos app, which infamously tagged Black people as gorillas, the company spent three years working to fix the app and then finally had to prohibit the tagging of any objects in photos as gorillas, chimps, or monkeys just to make the error go away.

Human Factor

At current levels of autonomy, human interaction is still required. It is this hand-off that comes into question. Given the opportunity to relax, drivers will do so, making them less likely to be attentive and ready to take over when the time comes, creating a dangerous gap in conscious control of the vehicle.

IF/THEN

Okay, let’s just pretend that FSD is ready to take you away on a worry-free trip to your corner store. What are the cons of such a WALL-E-esque world?

Modernity

Modernity is defined as “the quality or condition of being modern”. It is the concept of how our modern world impacts our culture, our health, our physicality, and our way of thinking. The animated film WALL-E is a great example of modernity: starship residents are given a life of ultimate ease and, as a result, become lethargic, less critical and less physically skilled.

Autonomous driving will bring about an environment where, as a society, we will lose the will and ability to pilot ourselves around independently. We will lose the skills and knowledge of driving, be constrained to known mapped routes, and forever be under surveillance. Speaking of which…

Security

To drive by wire, our vehicles will be connected to vast cloud-based control networks, saving, sharing, and disseminating even vaster amounts of data. As with Facebook, that data will be monetized, leveraged, and sold with or without our consent. Also, as proven by hackers in numerous YouTube demonstrations, networked transportation can be hacked and manipulated in many ways, including bringing a computer-controlled SUV to a violent standstill while it cruises down the highway.

Legality

According to a white paper published by Fish & Richardson, a leading American IP litigation firm, the legal landscape has two considerations when it comes to traditional vehicles:

- Product liability – when the product itself fails, such as the exploding Ford Pinto gas tanks back in the 1970s.

- Tort law – when the accident or incident is caused by driver negligence or unreasonable operation such as speeding or running a red light.

Things become sticky when you attempt to apply this legal framework to AVs. Since AVs are designed to remove human interaction, and thus human error, there is much more to consider when going to court. The paper describes these issues in detail:

- Types of vehicles involved in the collision: Non-AV accidents are typically litigated under tort law, whereas AV accidents may be litigated primarily under product liability law. It is unclear how courts will handle AV/nonAV accidents, which may not fall squarely under either tort law or product liability law.

- Causation: The different types of causation in accidents involving AVs may result in differing liabilities. Complex forensic and technical investigation may be needed to determine the cause of the accident—i.e., whether the accident was caused by human error or defect of the AV.

- Fault allocation: Ultimately, the individual or entity to receive fault will likely depend on who or what had control over the condition causing the accident. Courts may presume that the passenger is not at fault when traveling in a level 4 or 5 AV.

- Supplier liability: AVs include copious specialized components with important roles for autonomous driving functions provided by a variety of suppliers. All these component suppliers may be subject to product liability litigation.

- Cybersecurity/product liability: The deployment of AVs carries severe cybersecurity concerns. For example, if a hacker gains access to AV functions and causes an accident, the software developer may be subject to product liability based on vulnerabilities in the software.

- Indemnity: To build consumer confidence, some AV manufacturers have indicated that they will accept liability for accidents involving their vehicles. However, it is unclear how and to what extent indemnification would be offered.

As you can tell, AVs create a legal quagmire when it comes to responsibility. Not to say these issues are insurmountable, but full automation will certainly make the process more complicated and open to interpretation.

A safer world?

AV cheerleaders chant that human error is a factor in up to 90% of crashes and, since autonomous vehicles remove the human, they should reduce crash rates and insurance costs by 90%. This is a hopelessly reductive way of interpreting the situation. The Victoria Transport Policy Institute takes a less blindly optimistic approach and outlines potential issues of an all-AV world:

- Hardware and software failures

- Malicious hacking

- Increased risk-taking as occupants and other road users feel safer

- Platooning risks: although driving in groups at close quarters may reduce traffic congestion, it elevates the risk of a larger incident, especially if non-AV vehicles join traffic.

- Increased total vehicle travel due to riders preferring to take their own transportation and read a book over taking a bus and standing in the aisle.

- Risks to other road users, such as motorcyclists, cyclists and pedestrians, who may not be picked up by sensors or interpreted properly by software.

- Reduced investment in conventional safety strategies

- Higher vehicle repair costs due to hardware and energy storage, especially in post-accident repair

More sober studies, such as the one carried out by Mueller, Cicchino and Zuby in 2020, put the accident prevention of AVs closer to 34%, perhaps a little more with complete implementation, but nowhere near the touted 90%.
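The gap between those two figures is easier to appreciate with a little arithmetic. The sketch below assumes a purely hypothetical baseline of 1,000 crashes, a number chosen for convenience and not taken from any study, and compares how many crashes would remain under each prevention rate. The function name `crashes_remaining` is likewise just illustrative.

```python
def crashes_remaining(baseline: int, prevention_pct: int) -> int:
    """Crashes still occurring if prevention_pct percent of them are prevented."""
    return baseline * (100 - prevention_pct) // 100


BASELINE = 1000  # hypothetical number of crashes, for illustration only

touted = crashes_remaining(BASELINE, 90)   # the cheerleaders' 90% figure
studied = crashes_remaining(BASELINE, 34)  # the 34% figure cited above

if __name__ == "__main__":
    print(f"Crashes left at 90% prevention: {touted}")
    print(f"Crashes left at 34% prevention: {studied}")
    print(f"Ratio: {studied / touted:.1f}x")
```

Under that assumed baseline, 100 crashes would remain at 90% prevention versus 660 at 34%, more than six times as many as the marketing figure implies.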

Again, more sane analysts place the ubiquitous adoption of affordable AVs somewhere in the late 21st century with an 80% saturation level.

Future of autonomous driving

Luckily, FSD isn’t the only game in town. AV technology is being advanced by some major auto manufacturers and tech developers including:

- GM

- Waymo

- Daimler-Bosch

- Ford

- Volkswagen Group

- BMW-Intel-FCA

- Renault-Nissan Alliance

- Volvo-Autoliv-Ericsson-Zenuity

- PSA

So, what’s this mean for FSD?

Despite claims otherwise, it is one of many and not the best in class. FSD may one day succeed, but that day is a long way off, and it would be in everyone’s best interest if its progress were better regulated and Tesla were transparent about its shortcomings. These aren’t moral suggestions; they hinge on public safety and truth in advertising, which should trump Musk’s need to be first out the door.

–Gaalen Engen